Data as a service: Fast, user-friendly reporting for hundreds of billions of records

Our client is a privacy-focused marketplace that provides search integrations for advertisers and publishers to serve pay-per-click ads in response to consumers’ searches. These integrations require a reporting interface for analyzing main sources of revenue and optimizing advertising strategies.

Additionally, the system needs to be responsive even in the face of massive amounts of data. Lineate analyzed the workloads and built a new reporting architecture with a data pipeline that can seamlessly handle up to 500 analytical search queries per second, returning results within half a second.

Share:

Services:

- Data Integration, Analytics, and Activation

- Cloud Strategy and Migration

Got a project?

Harness the power of your data with the help of our tailored data-centric expertise.

Contact usShare:

Services:

- Data Integration, Analytics, and Activation

- Cloud Strategy and Migration

Got a project?

Harness the power of your data with the help of our tailored data-centric expertise.

Contact usProblem

Our client had a reporting interface for its customers that allowed them to analyze ad campaign performance. The challenge was the underlying data’s massive size: ten billion rows per day, representing second-by-second activity across many dimensions. This challenge was further compounded by the interface’s flexibility, which allowed customers to create arbitrary queries against the data.

The existing system, built on Snowflake, was able to run the queries, but they often took several minutes to complete. The system needed to be able to return results from as recent as five minutes before the query in half a second or less and with large numbers of concurrent users.In order to support such query capability, we also needed to update the operational data processing pipeline to effectively pre-aggregate the data and efficiently stream it to a scalable back end.

Usually, in big data applications, it is common to use append-only data storage. But because the near-real-time reporting needs were typically focused on the most recent activity, the current months, days, and hours needed to be constantly updated. Tuning an analytical cluster to handle this kind of data update load was a novel and difficult problem.

Solution

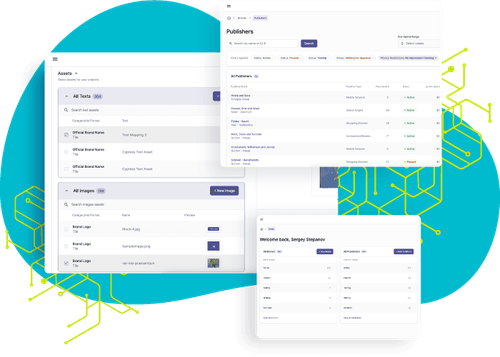

We built a cloud-based data pipeline using Lambda architecture to optimize the most common types of user queries. The pipeline feeds raw data into a ClickHouse database and maintains the data that has been pre-aggregated through Apache Spark. This new process created a massive database of every event while keeping yearly, monthly, daily, and hourly aggregates of the data in the 10 dimensions most often queried. We also rebuilt the reporting system interface to leverage this new back end and provide a highly responsive user experience.

Rebuilding the system involved first doing an in-depth analysis of usage patterns to understand the best candidates of data to pre-aggregate. For this analysis, we evaluated several data storage engines and built proofs of concept with two of them (ElasticSearch and ClickHouse).

We tested dozens of potential use cases and traffic patterns. The results of this analysis and load testing informed the final architecture.We also implemented monitoring dashboards that provide overall statistics as well as alerts and indicators of potential performance issues requiring further tuning.

Results

Every day, the data pipeline processes ten billion linked data records from upstream systems into a storage container that we designed and tuned to hold two years of aggregated data.

Previously, the system had to operate with intensively duplicated data; now, the data is deduplicated automatically when it enters the storage container.The reporting interface is running in production and supports over 500 concurrent users, providing results to queries within half a second.

Despite the huge amount of data and the heavy reporting workload, the entire data-as-a-service platform costs less than $10,000 per month to host.

Tech Stack

Our tech stack features AWS S3 with Parquet files for optimized storage, GraphQL for flexible data querying, Apache Spark for robust data processing, and ElasticSearch for fast and precise search capabilities. Alongside these, ClickHouse and Vertica elevate our analytics, all while our frontend relies on React-based microservices for a modular and efficient user experience.